By Loring Wirbel and Lou Covey

A New Tech Press Report from Footwasher Media

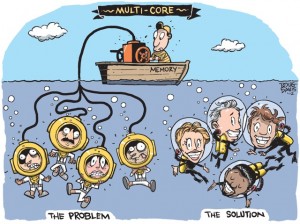

For the better part of two decades, the processor industry has been running pell-mell down the road of multicore design, packing more and more processor cores on a single chip. But a funny thing happened on the way to the personal supercomputer. It didn't work.

In 2007, a DARPA study on the potential of an exascale computer concluded that with the current architecture of processors, in other words x86 and PowerPC, we could not get there from here. As a result, in January 2012, DARPA announced the Power Efficiency Revolution for Embedded Computing Technologies (PERFECT) program to figure out what to do next.

Dr. David Patterson, a RISC pioneer and a leading voice in the development of multicore processors, suggested that the problem could be solved using FPGAs as an experimentation platform in a program he called Research Accelerator for Multiple Processors (RAMP) at UC Berkeley. In a series of RAMP presentations and in a related 2006 Berkeley white paper, "The Landscape of Parallel Computing Research: A View from Berkeley," Patterson said that the power consumed by the logic in the CPU, converted into heat, limits performance. Since any heat that cannot be removed by a heat sink reduces the performance of the transistors, the results are:

- If you increase the system clock to boost performance, heat rises, transistors slow down

- If you increase memory bus width and you increase the number of transistors, heat will increase and transistors slow down

- If you increase instruction-level parallelism (ILP) so more can get done at the same time, you increase the heat and...
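The three cases above share one mechanism. A standard first-order model of dynamic power in CMOS logic makes it explicit. This is a textbook approximation offered here as a sketch, not a formula quoted from the Berkeley paper:

$$P_{\text{dyn}} \approx \alpha \, C \, V^{2} f$$

Here $\alpha$ is the fraction of gates switching per cycle, $C$ the switched capacitance, $V$ the supply voltage, and $f$ the clock frequency. Raising $f$ raises power directly, and adding transistors (wider buses, more ILP hardware) raises $C$. Because higher clock rates have typically also required a higher $V$, power tends to grow faster than linearly with frequency.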

The result of the RAMP effort? "The memory wall has gotten a little lower and we seem to be making headway on ILP, but the power wall is getting higher," Patterson said. One anonymous engineering wag put it more succinctly:

"We're screwed."

Throughout this process, however, there have been voices, crying by the roadside as it were, "Go back! You're going the wrong way!" And it may be time for those voices to be heard.

Going back to the turn of the century, companies like the UK-based Celoxica were pointing out the weaknesses of the multicore approach in contrast to a heterogeneous approach incorporating FPGAs.

"The first problem is the architecture of the standard processor doesn't lend itself to parallelism," said Jeff Jussel, former VP of marketing for Celoxica and current senior director of technical marketing for Element14. "No matter how many processors you put on a chip, we are not seeing any one algorithm processing faster because it is too hard to program access to all the processors. What you end up with is a system that can do 12 things, but no actual system speed increase with an incredible power increase."
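Jussel attributes the lack of single-algorithm speedup to programming difficulty. A related, standard way to see why extra cores do not automatically help one algorithm is Amdahl's law, which is brought in here as an illustration and is not cited in the interview. The minimal Python sketch below assumes a hypothetical algorithm of which only half can run in parallel; the function name and the 50 percent figure are assumptions chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup of a single algorithm under Amdahl's law.

    parallel_fraction: share of the run time that can be spread across cores
                       (an assumed value, not a measurement).
    cores:             number of identical cores available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is unaffected by core count; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload: half of the algorithm is parallelizable.
    for cores in (1, 2, 4, 12, 64):
        print(f"{cores:>3} cores: {amdahl_speedup(0.5, cores):.2f}x")
```

Under that assumption the speedup can never reach 2x however many cores are added, while each additional core still draws power.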

Celoxica's approach, according to Jussel, was to break up the algorithm over multiple processors inside the FPGA with dedicated memory. "You end up with millions of tiny processors optimized for the algorithm. When the algorithm changes, you just reprogram the FPGA."

At the time, the problem was not immediate and the market was entrenched. Celoxica ultimately spun off its tool business, which eventually landed in the hands of Mentor Graphics, and kept its board development business. That business was always focused on one-off applications, ranging from mining to financial services.

Patterson said their work in RAMP showed that an FPGA approach, especially for highly focused applications, was "more and more attractive," but there were two specific obstacles: power and design tools. "We found the CAD tools available were just not that easy to work with. We were actually surprised at how difficult it was and it formed a major stumbling block. And while FPGA providers have gotten better with power even as the number of transistors increases, they still need to get better with it before it can be a mainstream answer."

The reprogrammable nature of an FPGA has allowed several board- and system-level companies, ranging from Wall Street FPGA in financial analysis markets to Convey Computing in scientific analysis, to assign ARM cores or small hard-configured RISC blocks like MicroBlaze to a variety of tasks, with subroutines handed off to coprocessors on the same FPGA. But the dream of a fully retargetable FPGA system, touted in the mid-2000s by companies like Quicksilver, has been largely deferred because of the difficulty of developing parallel multithreaded software for such changing architectural types.

Think "many" not "multi"

ARM walks into the fray almost in a position of neutrality. While it still endorses the validity of Intel's homogeneous approach to multicore, as early as last fall it began discussing a "many-core," as opposed to multicore, approach. According to John Goodacre, program manager in the ARM Processor Division, the traditional approach of using full-performance cores still has a long road ahead of it, especially in dual- and quad-core designs, but the large cores may not be necessary, especially in some consumer applications.

"Mobile applications are full of little processes," Goodacre explained. "If you put all those processes into four or eight big cores, you don't actually see a big performance improvement, but you see quite a big negative power impact. A many-/multi-processing approach duplicates the capability of a big homogeneous multicore design that is inherently more power efficient."

Goodacre points to ARM's big.LITTLE concept that marries an A15, which he claims is capable of running more of today's dual-core type software, with four small A7 cores in a power-efficient formation.

"This approach is mostly targeting toward power, but it’s also giving the next generation programmers the concept that there’s also a lot more power efficient processes available for that next generation of software. The first next generation software I anticipate will be in gaming, but as time progresses and more and more availability of more cores, there will be more software available."

From the software side

Architecture experts developing RISC instruction sets for a mix of server and embedded applications – dominated by ARM, but also including MIPS, Tensilica, and other companies – have offered their cores to standard IT developers, to FPGA and ASIC vendors, and to embedded specialists. Xilinx and Altera, among other FPGA vendors, say they see a mix of SMP and asynchronous RISC implementations. Some ARM licensees, including Freescale Semiconductor, Texas Instruments Inc., Qualcomm Inc., and Broadcom Corp., utilize ARM as part of non-SMP designs that use a central control-plane processing environment, in conjunction with on-chip coprocessors for functions such as encryption, deep packet inspection, and fast list searches for tasks such as routing.

See the full story at element14.com and additional coverage at EDN.com.

As multicore processor design hits power, memory and ILP walls with increasing frequency, the established methodology is pinning much hope on 3D heterogeneous approaches. Those efforts will be described in detail in a series of best practices tutorials at this year's
