Alternative energy is a huge industry generating and spending money at breathtaking speed. Governments have invested trillions of dollars in building out the industry's infrastructure, and thousands of private citizens have invested billions in private applications. And yet two-thirds of our electrical energy is generated by fossil-fuel-burning technology, just as it was 20 years ago. Despite all the infrastructure established in the past two decades, the world's demand for energy has outstripped our ability to meet it with alternative power.

It's time we admitted that alternative power is not an alternative. It is only a supplement. Once we admit that fact, we may be able to get to work actually finding a real alternative.

This series had its genesis more than 40 years ago with my first foray into investigative journalism: a seven-part series on alternative energy as it was in the 1970s. The conclusion of that original work was that alternative energy lacked the ability to meet the world's needs, much less what it wanted for energy. After 40 years of following the industry, I have found it is not much different now. The technology, while more efficient and cheaper now, is still not sufficient to meet demand and probably never will be on our current course. Multiple reports predict that our energy usage will triple in the next 15 years. If “alternative” energy can’t keep up with the need today, how bad will things be in 15 years and beyond?

In this new series we will look at various sources of alternative power: the good, the bad, and the future of each technology. It will conclude with a look at what might be possible today if only we look outside the box we have created.

Today we set the stage for where we are.

There have been two contradictory articles in the Washington Post recently. The first stated that the costs of wind and solar have come down dramatically and are close to being on par with coal and oil generation in cost per megawatt. The article indicates that those dropping prices should make it easier to meet the demand for energy using alternative sources. What the article doesn't state is that the lower cost is largely achieved through government subsidies, most of which are going away soon, not just in the US, but everywhere in the world. Take away the subsidies and the price will skyrocket.

The second article, however, paints a very different picture. In 1990, two-thirds of all our power production came from coal, oil and natural gas generation plants. That was the beginning of the modern alternative energy industry, as subsidies started growing. What also continued to grow was the world's demand for electricity, much of which is driven by the computing industry with its always-on computers and data centers, the latter consuming 10 percent of all electricity generated. That demand has required additional generation from carbon-fuel technology, to the point that after trillions of dollars in investment in alternative sources, coal, oil and gas still account for two-thirds of all generation.
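The arithmetic behind that stubborn two-thirds figure is simple enough to sketch. The Python below is a rough illustration, not data: the demand index of 100 for 1990 and its doubling to 200 are hypothetical numbers chosen for convenience, and `split_generation` is a made-up helper.

```python
def split_generation(total_demand, fossil_share=2 / 3):
    """Split total generation into (fossil, non_fossil) portions."""
    fossil = total_demand * fossil_share
    return fossil, total_demand - fossil


# Hypothetical demand index: 100 in 1990, doubled to 200 today.
# These figures are illustrative assumptions, not measurements.
fossil_then, other_then = split_generation(100)
fossil_now, other_now = split_generation(200)

print(f"1990:  fossil {fossil_then:.1f}, non-fossil {other_then:.1f}")
print(f"today: fossil {fossil_now:.1f}, non-fossil {other_now:.1f}")
```

Under those assumed numbers, non-fossil output doubles and fossil output doubles right along with it, so the share never moves.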

Much has been made of Europe's advances in alternative power. The Netherlands recently announced that its ocean-based wind farms delivered more than 100 percent of the country's power needs on one day this year, and some countries are claiming that 50 percent of their daily needs are often provided by alternative power. What is not discussed is how inconsistent those alternative sources are.

Wind produces power when the wind is blowing within a specific narrow range of speed. If the wind speed is too low the turbines don't turn. Too high and the turbine has to be stopped to keep the blades from warping due to the torque placed on them. Solar produces power for 6 hours a day at best, during the summer. That works out great for Spain which gets a lot of sun in the spring, fall and summer. It's not great for Sweden which gets virtually no sun for several months in the year. Bottom line: sun and wind are just not always available.
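A turbine's operating window can be pictured as a simple function of wind speed. The sketch below is a simplified model, not any manufacturer's power curve: the cut-in, rated and cut-out speeds (3, 12 and 25 meters per second) are assumed round numbers, `output_fraction` is a hypothetical helper, and the cubic ramp is an approximation resting on the fact that the power available in wind rises with the cube of its speed.

```python
def output_fraction(wind_speed, cut_in=3.0, rated=12.0, cut_out=25.0):
    """Return turbine output as a fraction of rated power (0.0 to 1.0).

    All three threshold speeds (in m/s) are assumed values for illustration.
    """
    # Too little wind to turn the blades, or so much that the turbine is stopped.
    if wind_speed < cut_in or wind_speed >= cut_out:
        return 0.0
    # At or above rated speed the turbine is held at full output.
    if wind_speed >= rated:
        return 1.0
    # Between cut-in and rated speed, approximate output with a cubic ramp.
    return (wind_speed**3 - cut_in**3) / (rated**3 - cut_in**3)


for speed in (2.0, 8.0, 15.0, 30.0):
    print(f"{speed:>5.1f} m/s -> {output_fraction(speed):.2f} of rated power")
```

In this model a calm day and a gale produce exactly the same result: nothing.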

As a result, Europe is quietly buying coal from the United States so it can gear up its older power plants to provide electricity on a consistent basis. This is good for the US, since its coal reserves rival Saudi Arabia's oil reserves. The coal industry has seen US demand drop, and harsher regulations keep electricity production from coal severely limited, but at the same time natural gas is enjoying a rapid increase in demand.

California has been crowing about the rapid increase of its alternative energy production and is predicting that 50 percent of all power produced in the state will come from alternative sources by 2030. That is entirely plausible as the state closes nuclear and carbon-fuel plants, but California currently imports 30 percent of its power from states producing energy surpluses from coal-burning plants. Part of the problem is that even in perfect conditions, some of the most touted technologies are not producing as expected.

For example, there is a massive facility in Ivanpah, California using acres of reflective panels to focus solar radiation on a single column placed in the center of the facility. The heat turns water to steam, which in turn drives traditional turbines to produce electricity. The trouble is that the facility is not producing power solely from solar energy. Its operators have had to bring in natural gas to supplement the heat source and get the facility up to its promised capacity. The shortfall lies in the turbines, which are rated at 33.3 percent theoretical efficiency but in reality operate at 25 percent efficiency.

That brings in the utilities, which bear the responsibility of meeting the population's power demands. The alternative energy industries promised the utilities a source of home-produced electricity by using customers' roofs for solar and wind power. Over the past 10 years that source has proved to be wildly unpredictable and has required the utilities to keep existing carbon-fuel plants spun up to 110 percent, just in case the solar/wind production drops off, which happens more often than not. There have been multiple lawsuits going back and forth across the country as utilities and private homeowners find that the promises of the alternative energy companies cannot be met with current technologies. There is also an investigation underway by the US Department of the Treasury against several large alternative energy companies regarding over-valuing technology for tax purposes.

When all the facts are in view, the alternative energy industry, and in fact the entire energy sector, is in serious disarray. There is hope, but only when we have a realistic view of what is actually happening.

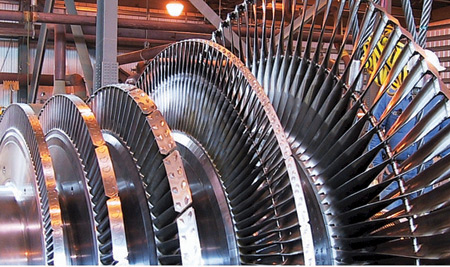

At the core of the difficulty will be the centerpiece of all energy conversion: the turbine. Turbines are used in traditional energy plants run on coal, oil and natural gas, but they are also used in hydroelectric, geothermal, solar concentration (like Ivanpah), tidal, wind and waste-heat conversion. Without a thorough rethinking of turbine design, we will be hard pressed to find a true alternative.

This series will look at all forms of energy production, from fossil-fuel to experimental concepts and everything in between. We will begin, next, with a look at the problem of turbines.

Sponsored by 3DP-international
