The conclusion of a year-long New Tech Press investigation of the employment industry is that it is failing job seekers, employers and the investment community. While some of these services can point to a few areas of success, and some are better than others, none is particularly effective. In fact, if job seekers were to place one roulette bet or buy one lottery ticket for every job they apply for through a job listing service, they would be more likely to win a living wage from gambling than from their job searches.

First, employers and the employment industry rely on Standard Occupational Classification (SOC) codes and the North American Industry Classification System (NAICS) as the foundation of job descriptions. In our interviews with HR professionals and system developers, we found that when creating job descriptions they consistently overuse and misuse these codes to pull in the most candidates, even unqualified ones.

Second, the “artificial intelligence” built into these systems is little more than a scan of those codes combined with word searches, which produces poor results.

To reach this conclusion, we entered the following job functions into a dozen job-finding sites (e.g., Monster.com) and employment sites (e.g., Facebook):

- Journalist

- Editor

- Marketing communications

- Marketing manager

- Audio design

- Video editing

- Program management

The searches produced at most two positions that were actually for those jobs, alongside hundreds of positions ranging from electronic system design to sous chef. The most egregious example was a standard search for audio designer jobs. This job is crucial in the television, movie and video game industries, and it is the key focus of the broadcast and electronic communications major at San Francisco State University. Searching for that job title on a dozen job-finding sites (e.g., Monster.com) returned more than 5,000 positions for electrical engineers and computer scientists and not a single job for an actual audio designer.

To see why we received these results, we dug into the SOC and NAICS codes appended to the job postings and found dozens of codes that had absolutely nothing to do with the listed jobs. The reason for these errors was simple: when a company is looking for employees, it inputs the codes related specifically to its industry or discipline. When a tech company like Google is looking for an engineer to design an audio codec, it appends the NAICS 541400 code for specialized audio systems design to the posting. The systems and HR professionals then match postings on the words “audio” and “design.” So an audio design engineer who produces sound gets thousands of job listings for semiconductor designers with experience in audio codecs.
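The word-search failure described above can be sketched in a few lines of Python. This is a deliberately simplistic, hypothetical matcher, not the algorithm used by Monster.com or any other site: it assumes a posting matches when every search word appears somewhere in its title or description, and the two postings are invented for illustration.

```python
# Hypothetical sketch of naive word-search job matching.
# The postings below are invented examples, not real listings.
import re
from dataclasses import dataclass


@dataclass
class Posting:
    title: str
    description: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it on any non-alphanumeric character."""
    return {tok for tok in re.split(r"[^a-z0-9]+", text.lower()) if tok}


def naive_match(query: str, postings: list[Posting]) -> list[Posting]:
    """Return postings whose title or description contains every query word,
    with no regard for what those words mean in context."""
    terms = tokenize(query)
    return [p for p in postings
            if terms <= tokenize(p.title + " " + p.description)]


postings = [
    Posting("Semiconductor Design Engineer",
            "Design low-power audio codec blocks for mobile chips."),
    Posting("Audio Designer",
            "Create sound effects and mixes for an upcoming video game."),
]

for p in naive_match("audio design", postings):
    print(p.title)  # prints: Semiconductor Design Engineer
```

Because the matcher only checks whether the words “audio” and “design” appear, the codec job matches while the sound job, whose title says “designer,” does not. A production search engine would likely add refinements such as stemming and ranking, which this sketch omits; the point is only that matching words rather than meaning produces the kind of results the investigation found.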

Neither the NAICS nor the SOC system is an adequate source of data for effective job placement, yet these codes are foundational to all job postings.

The next problem is human fallibility.

In spite of the flood of automated job sites and technology, all of which we found to be horribly flawed, the HR industry depends on humans who are deluged by unqualified applicants fed to them by that flawed technology. These professionals are well versed in the legal requirements of their profession but lack basic tech understanding and underuse relevant technology.

We talked to 27 in-house and independent recruiters over the past year, some of them senior HR managers and vice presidents. None of them knew the capabilities of the search technology, much less the full capabilities of artificial intelligence. Many of them were flummoxed by simple spreadsheet tools. As a result, they do not use basic automation tools, even those available for free, and are generally overwhelmed by the volume of communication they handle daily from employers and potential employees. One manager at a major marketing automation company did not use her own company’s technology for job openings. In another case, a recruiter for a social media company did not even have a profile on the company’s platform.

The combination of bad and misused data and a lack of basic tech understanding results in an ineffective mechanism for matching qualified people with jobs and, hence, the high number of qualified people leaving the job market altogether.

As frustrating as this may be for employers and job seekers, it must be even more frustrating for the investment community. More than $4 billion has been invested in HR tech startups in the past two years, and there is no end in sight. Companies and job seekers are paying these companies millions of dollars in subscriptions, yet with few positive results. When the current unemployment rate is combined with the number of people who have left the job market, the effective unemployment rate in the US is 40 percent, with no relief in sight.

What can be done? Start with the data.

The SOC and NAICS codes were not intended to help people find jobs. They were designed to classify and maintain employment records for population studies and taxation. Using them for job openings is a gross misuse of the data. The HR industry needs to employ experienced communicators and data scientists to develop and sort job opening data. The communicators can create accurate, realistic job postings, and the data scientists, using sentiment analysis, can identify and process recruits more effectively.

Second, marketing automation and proper SEO can attract, sort and communicate effectively with applicants, making HR professionals more efficient and productive by eliminating frustration and wasted effort.

Finally, employers need an attitude adjustment regarding what they look for in potential employees. As the unemployment rate falls, it will become more difficult for overly selective companies to find productive employees. Instead of investing in tech built on bad data, they should invest in training HR staff to do better work.

It's time we admitted that alternative power is not an alternative. It is only a supplement. Once we admit that fact, we may be able to get to work actually finding a real alternative.


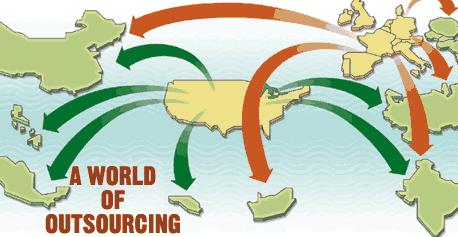

away from one country in favor of another. As the practice has matured, it has become more of a zero-sum game as long as the participants realize it is best as a cooperative exercise.


She has a point. There have been relatively few people who have actually experienced personal financial loss. For example, just last week, Target announced a settlement of $10 million for the breach that compromised the data of more than 100 million people -- 10 cents for each victim, not counting legal fees.


Which may be why some leading figures in the industry tell consumers they are pretty much on their own. Herjavec Group founder and CEO Robert Herjavec discussed the recent and massive breach of Anthem in a recent

The hack and subsequent terror threat of Sony Pictures laid bare the inherent weakness of cyber security in the world. Even the most powerful firewall technology is vulnerable to the person with the right user name and password (credentials).

New Tech Press has been looking at this trend for the past few months and will publish a series of articles and interviews beginning this month and running deep into 2015. What has become clear is that outsourcing falls into distinct groups: multinational enterprises providing soup-to-nuts services for large customers, foreign national organizations targeting US and European corporations, and “blended” suppliers that feature local management with foreign-based resources. The latter two often provide unique specialization in design and industrial niches, like security, automotive and web design.